Reality Goggles

→ An experiment with AI-supported X-ray glasses that restore the view out of subway windows in tunnels.

Liminality in urban planning

We all know travel as a moment of liminality and transition. Worries fade quickly, only to pop back into our minds just before the plane lands. On long train journeys, we can enjoy the view out of the window, which gives us a sense of the route we are traveling. In urban areas, however, we experience an architectural pragmatism that results in serialization. The subway is one example: its windows show nothing but the tunnel wall. Subway stations also serialize the city architecturally, abstracting it into names on signs.

The X-ray vision vision

We wondered what it would feel like to look up while sitting in the subway and, through some fancy X-ray technology, see the world above you: all the pipes running through the ground, secret cellars and undiscovered worlds, but also the well-known city of Vienna up above. One could regain a sense of traveling. Of course, X-ray vision remains a vision. For now. But after seeing Paragraphica by Bjørn Karmann, we thought that with the help of a little generative AI we could at least build a tangible prototype that attempts this with today's technology.

Functional prototype

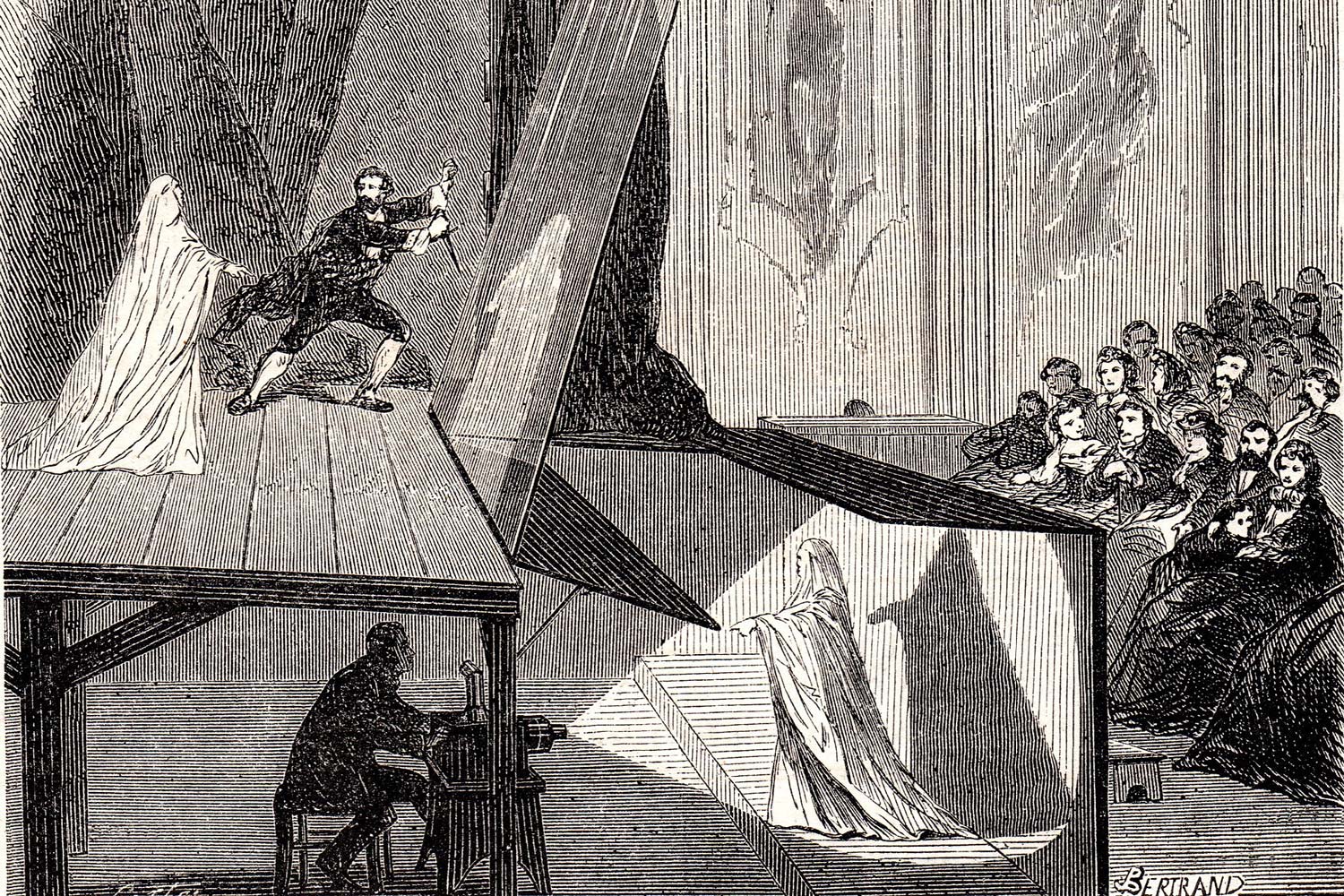

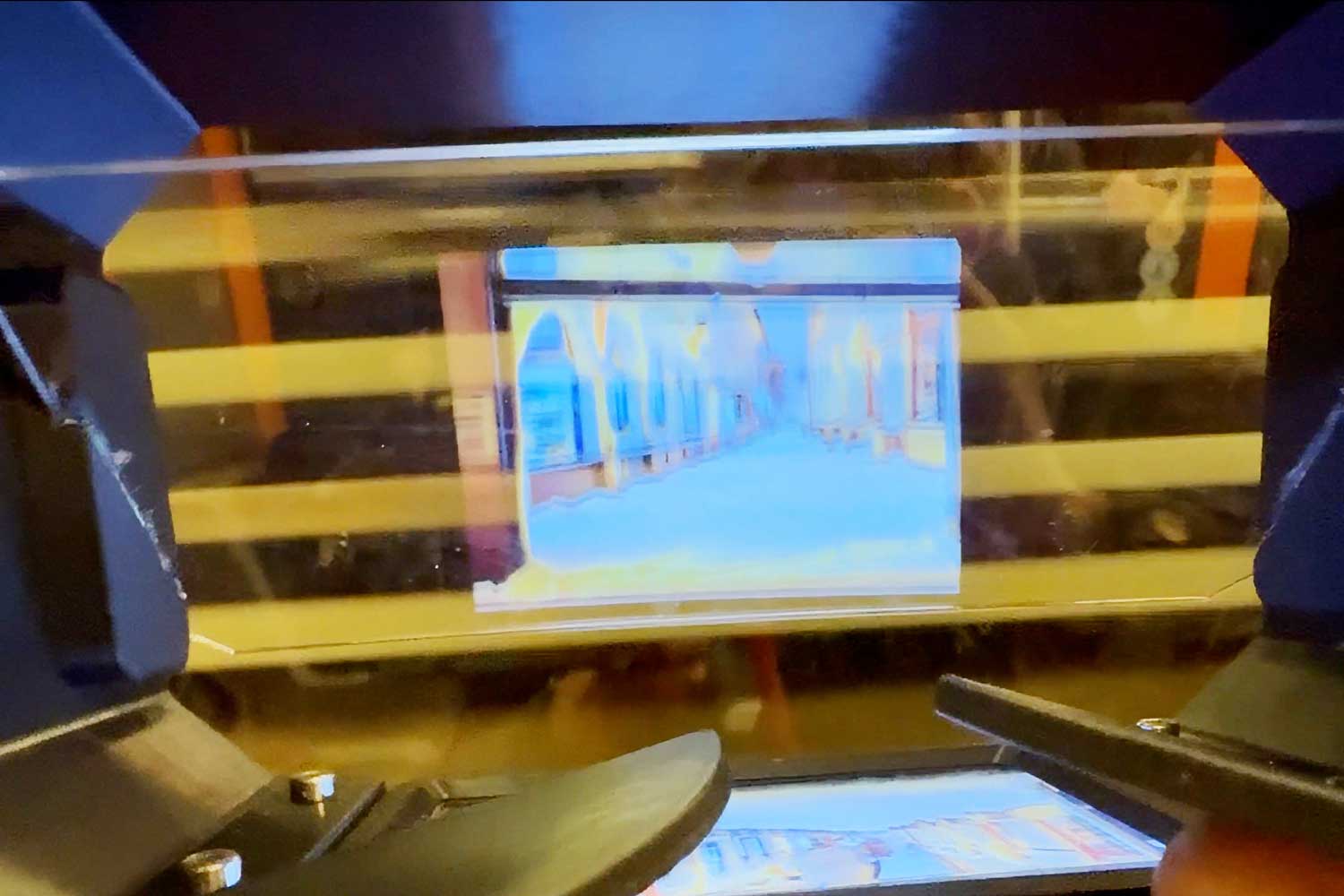

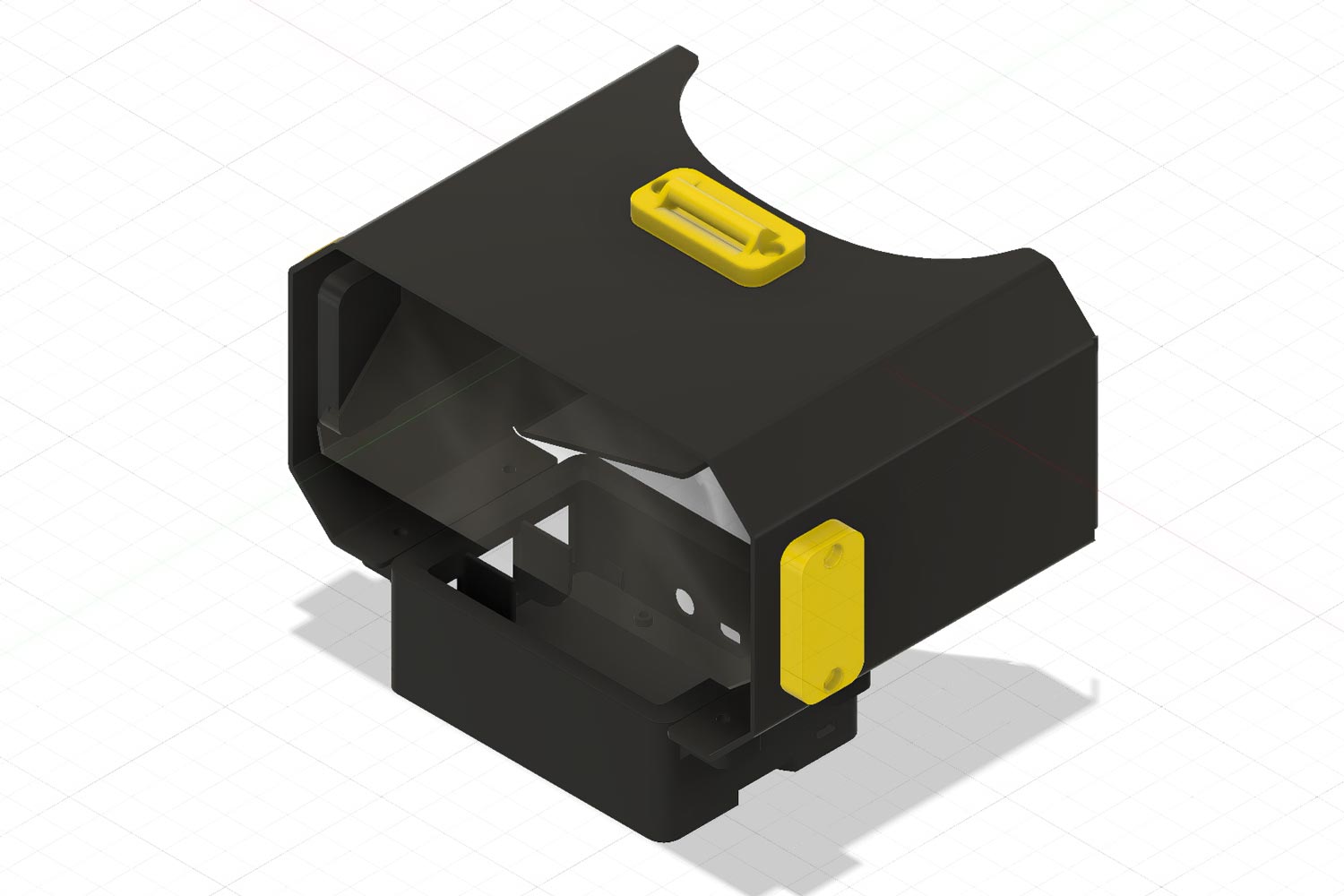

We set out to build a prototype that would bring this idea closer to reality. Our 3D-printed headset uses the so-called "Pepper's ghost" effect: a pane of glass tilted at 45° reflects an image shown on a screen above or below it into the viewer's eyes, while still letting light from the real world through. The technique is old and has been used in theaters since the 19th century.
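To see why the reflected image appears to float upright in front of the wearer, the 45° pane can be treated as a plane mirror. The sketch below is only a geometric illustration: the coordinate system and the dimensions (a screen lying 4 cm below the glass) are made up for the example, not measured from the headset. It reflects the corners of a flat, upward-facing screen across the pane:

```python
import math


def reflect(point, normal):
    """Reflect a 3D point across a plane through the origin with the given normal."""
    length = math.sqrt(sum(c * c for c in normal))
    unit = [c / length for c in normal]
    distance = sum(p * u for p, u in zip(point, unit))  # signed distance to the plane
    return tuple(p - 2 * distance * u for p, u in zip(point, unit))


# Axes: x to the right, y up, z forward (away from the viewer).
# The glass passes through the origin at 45°, so light rising (+y) from the
# screen is sent back towards the viewer (-z); its normal is (0, 1, 1).
GLASS_NORMAL = (0.0, 1.0, 1.0)

# Hypothetical screen lying flat 4 cm below the glass, facing up.
corners = [(x, -4.0, z) for x in (-3.0, 3.0) for z in (-2.0, 2.0)]

for corner in corners:
    image = reflect(corner, GLASS_NORMAL)
    print(corner, "->", tuple(round(v, 6) for v in image))
```

All four corners land at z = 4: the horizontal screen becomes an upright virtual picture 4 cm beyond the center of the glass, as far beyond it as the screen sits below it, and the screen's depth coordinate turns into height in the picture. Because a single reflection reverses handedness, anything drawn on the screen appears mirrored to the wearer.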

A Raspberry Pi is mounted to the bottom of the headset with its screen facing upwards. It runs a Python script that queries several web APIs and shows AI-generated approximations of your surroundings on the screen. Through the tilted glass pane, the wearer sees the AI-generated image and the real world at the same time.

The cover mainly serves to darken the area around the eyes. At the bottom is a pod that holds both the Raspberry Pi and a 3.5 inch monitor shield. The headband is modeled after the one on the Meta Quest 2, is held to the main body by yellow clamps, and is adjustable in size.

The code

The software works much like Bjørn Karmann's AI camera. Since the headset does not yet have a GPS module, we had to use a little trick: the Python script scans for nearby WiFi networks and sends their MAC addresses to the Google Geolocation API, which returns an approximate location. We then use these coordinates to collect more information, such as the street address, the current weather and points of interest, along with the current date and time.

From all this data, the script writes a readable sentence describing a photo taken at that location, where the weather is so and so and x parks, restaurants, etc. are nearby. We also insert the time of day as a word (noon, dawn, morning, etc.) and the month, so the image reflects the correct time of year. The finished sentence is then passed to a Stable Diffusion API.
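A minimal sketch of the location and prompt steps, using only the standard library. The Geolocation endpoint and its `considerIp` and `wifiAccessPoints` request fields follow Google's documented API; everything else is our assumption: the function names, the time-of-day boundaries, the sentence template and the example inputs. The text does not name the services used for the street address, weather and points of interest, so those lookups are left out and their results are passed in as plain values.

```python
import json
import urllib.request
from datetime import datetime

# Google Maps Platform Geolocation API endpoint (requires an API key).
GEOLOCATION_URL = "https://www.googleapis.com/geolocation/v1/geolocate?key={key}"


def geolocation_request(networks):
    """Build the request body from scanned WiFi networks.

    `networks` is a list of (mac_address, signal_dbm) tuples, e.g. parsed from
    a WiFi scan on the Raspberry Pi (the scanning step itself is not shown).
    """
    return {
        "considerIp": False,  # locate from the WiFi access points only
        "wifiAccessPoints": [
            {"macAddress": mac, "signalStrength": dbm} for mac, dbm in networks
        ],
    }


def locate(networks, api_key):
    """POST the scan to the Geolocation API and return (lat, lng)."""
    request = urllib.request.Request(
        GEOLOCATION_URL.format(key=api_key),
        data=json.dumps(geolocation_request(networks)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        location = json.load(response)["location"]
    return location["lat"], location["lng"]


def time_of_day(hour):
    """Map an hour (0-23) to a word for the prompt; the boundaries are a guess."""
    for end, word in ((5, "night"), (7, "dawn"), (12, "morning"),
                      (14, "noon"), (18, "afternoon"), (21, "evening")):
        if hour < end:
            return word
    return "night"


def build_prompt(address, weather, places, now):
    """Compose one sentence describing a photo taken here and now."""
    nearby = ", ".join(f"{count} {kind}" for kind, count in places.items())
    return (f"A photo taken at {address} on a {weather} {time_of_day(now.hour)} "
            f"in {now.strftime('%B')}, with {nearby} nearby.")


# Hypothetical values standing in for the address, weather and POI lookups.
print(build_prompt("Karlsplatz, Vienna", "cloudy",
                   {"parks": 2, "restaurants": 5}, datetime(2023, 11, 14, 8, 30)))
# A photo taken at Karlsplatz, Vienna on a cloudy morning in November, with 2 parks, 5 restaurants nearby.
```

Keeping the request body and the prompt text as pure functions makes them easy to check without network access; only `locate` talks to Google. Google's documentation asks for at least two access points per request.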

Stable Diffusion is a generative AI model that creates images from text prompts. Once the generated image comes back, the Raspberry Pi displays it on its screen using a simple tkinter interface.
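A minimal sketch of the display step, assuming the Pillow library for loading and scaling the image and a 480×320 resolution for the 3.5 inch screen (a common size for such displays, but not stated in the text). The function names are ours, and the authors' interface may well differ. tkinter and Pillow are imported inside `show_fullscreen` so that the scaling helper can be used without a display.

```python
SCREEN_SIZE = (480, 320)  # assumed resolution of the 3.5 inch display


def fit_to_screen(image_size, screen_size=SCREEN_SIZE):
    """Scale (width, height) to fit the screen while keeping the aspect ratio."""
    width, height = image_size
    scale = min(screen_size[0] / width, screen_size[1] / height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def show_fullscreen(path):
    """Display an image full-screen on a black background until Escape is pressed."""
    import tkinter as tk
    from PIL import Image, ImageTk  # Pillow, assumed to be installed

    root = tk.Tk()  # the Tk root must exist before creating an ImageTk.PhotoImage
    root.attributes("-fullscreen", True)
    root.configure(background="black")
    image = Image.open(path)
    photo = ImageTk.PhotoImage(image.resize(fit_to_screen(image.size)))
    tk.Label(root, image=photo, background="black").pack(expand=True)
    root.bind("<Escape>", lambda event: root.destroy())
    root.mainloop()
```

Calling `show_fullscreen("generated.png")` (a hypothetical file name) would show the downloaded image. The black background matters in a Pepper's ghost setup: black pixels emit no light, so nothing is reflected there and the real world stays visible through the glass.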

Credits

Project created as part of Design Investigations at the University of Applied Arts Vienna, together with Leo Hafele, Bjørn Ravlo-Leira and Johannes Mayer. Coding and ideation support by Grayson Earle. The project was heavily inspired by Bjørn Karmann's AI camera Paragraphica.