Reality Goggles

Prototyping AI-assisted AR glasses that reveal hidden architectural elements in urban space.

Liminality in Urban Planning

We all know travel as a moment of liminality and transition. Worries are quickly forgotten, only to come back to us shortly before landing. On long train rides we can enjoy the view from the window, which gives us a sense of how our journey is progressing. In urban areas, however, we experience an architectural pragmatism that leads to serialization. One example is the subway (U-Bahn), whose windows show nothing but the tunnel wall. Subway stations likewise serialize the city architecturally, abstracting it into names on signs.

The X-Ray Vision

We wondered what it would feel like to sit in the subway, look up, and see the world above through sophisticated X-ray technology: all the pipes running through the ground, secret cellars and undiscovered worlds, but also the familiar Vienna above. One could regain a sense of travelling. Of course, X-ray vision remains a vision for now. But after seeing Paragraphica by Bjørn Karmann, we thought that with the help of generative AI we could at least try to build a tangible prototype that attempts this with today's technology.

Working Prototype

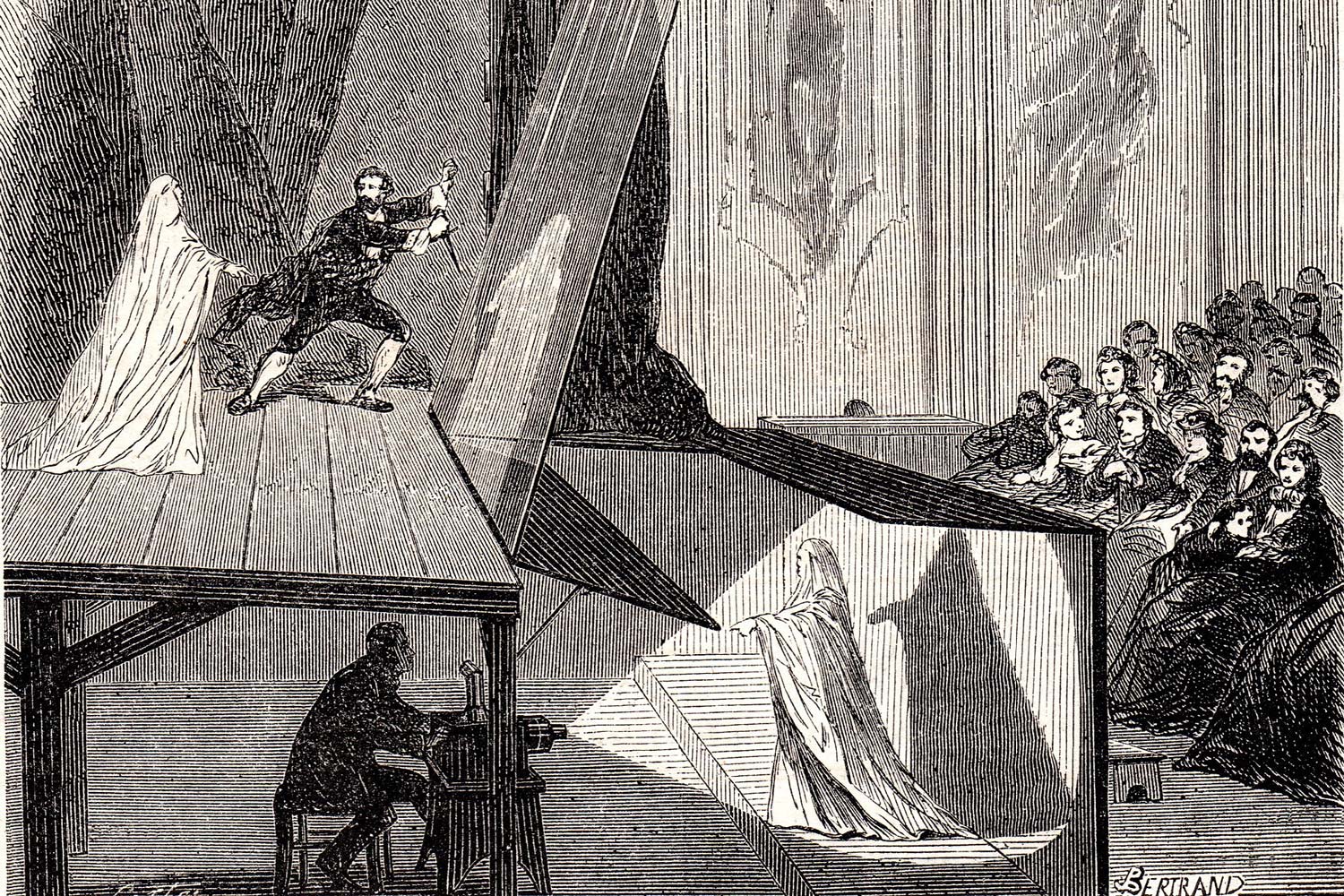

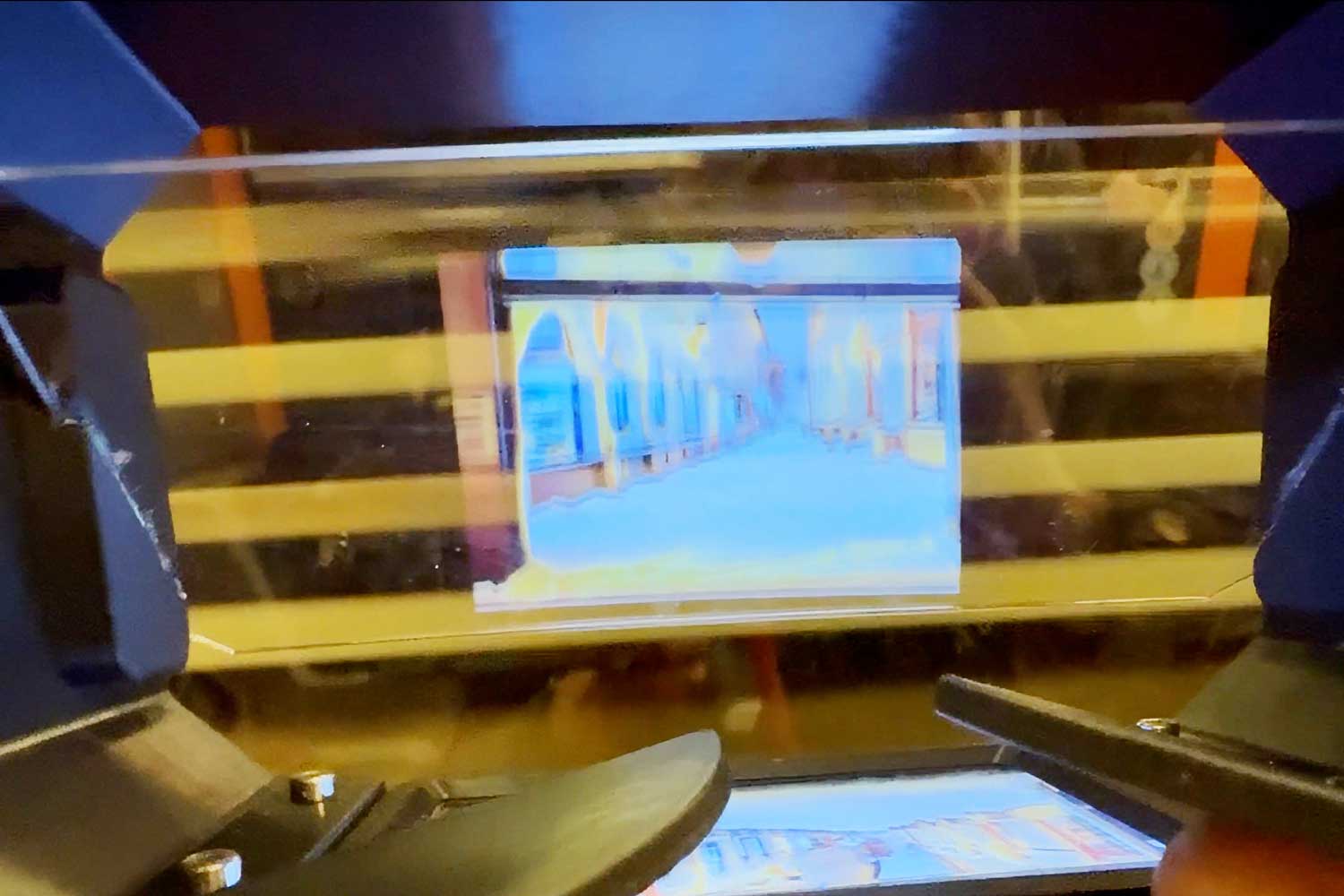

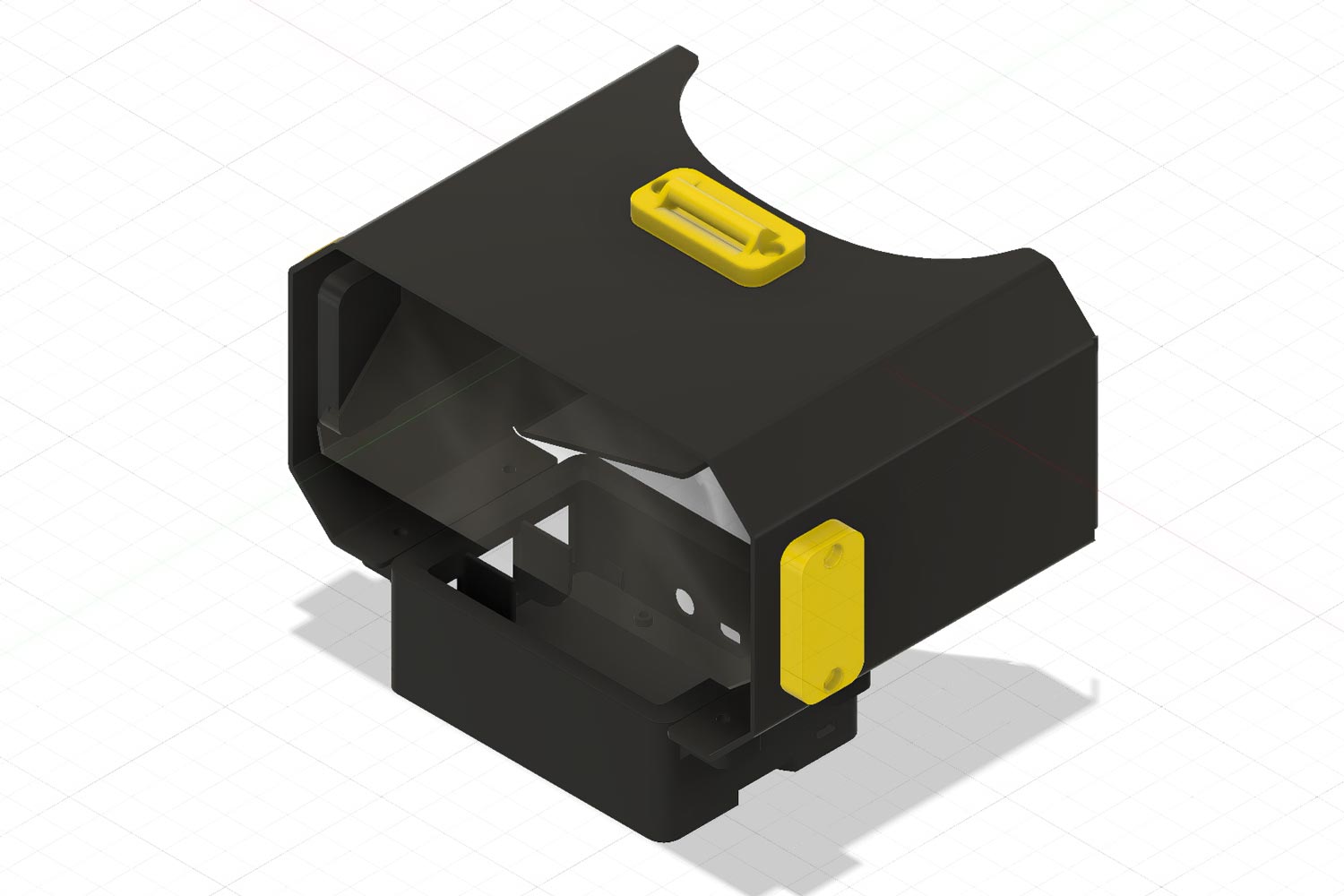

We set out to develop a prototype that brings this idea closer to reality. Our 3D-printed headset uses the so-called "Pepper's ghost" effect, in which a pane of glass tilted at 45° reflects an image from a screen above or below it into the viewer's eyes while also letting light from the real world pass through. The technique is actually very old and has long been used in theatres.
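
The geometry of the effect can be checked with the standard mirror-reflection formula r = d - 2(d·n)n. The following is only a minimal sketch: the coordinate axes (y pointing up, z pointing away from the wearer) and the function name `reflect` are assumptions made for illustration and are not taken from the project's code.

```python
import math

def reflect(direction, normal):
    """Reflect a ray direction off a flat surface with the given normal.

    Uses r = d - 2 (d . n) n, where n is the normal scaled to unit length.
    """
    length = math.sqrt(sum(c * c for c in normal))
    n = [c / length for c in normal]
    dot = sum(d * c for d, c in zip(direction, n))
    return tuple(d - 2 * dot * c for d, c in zip(direction, n))

# Assumed setup: the screen sits below the glass and emits light upward (+y).
# A glass pane tilted by 45 degrees has a normal along (0, 1, 1).
ray_from_screen = (0.0, 1.0, 0.0)
glass_normal = (0.0, 1.0, 1.0)

# The reflected ray points along -z, i.e. horizontally toward the wearer's eyes.
reflected = reflect(ray_from_screen, glass_normal)
```

Under these assumptions, light leaving the screen vertically is turned by 90° and reaches the eye horizontally, which is why the screen image appears to float in front of the wearer.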

A Raspberry Pi is mounted in the lower part of the headset with its screen facing upward. A Python script connects to several APIs, and the small computer displays AI-generated approximations of the surroundings on its screen. Through the tilted glass pane, wearers see the AI-generated image and the real world at the same time.

The cover mainly serves to darken the area around the eyes. At the bottom is a compartment that holds both the Raspberry Pi and a 3.5-inch monitor shield. The head strap is modelled on that of the Meta Quest 2 and is attached to the main body with yellow clips. It is adjustable in size.

The Code

The code works very much like Bjørn Karmann's AI camera. The headset does not yet have a GPS module, so we had to use a small trick. The Python script scans for nearby Wi-Fi networks. Based on their MAC addresses, the Google Geolocation API returns an approximate geographic position. We use these coordinates to gather further information such as the street address, current weather, points of interest, and more. We also record the current date and time. Once the data is collected, we write a readable sentence describing a photo taken at that location, where the weather is such-and-such and x parks, restaurants, etc. are nearby. We also add the time of day as a word (noon, dawn, morning, etc.) and the month, so that the season is identified correctly. Once the sentence is "written", it is passed to a Stable Diffusion API.
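
The pipeline described above could be sketched roughly as follows. This is not the project's actual script: all function names, the hour boundaries for the time-of-day words, the sentence template, and the example address are assumptions for illustration. The scan parser assumes `iwlist`-style text output, and the payload follows the `wifiAccessPoints` / `macAddress` request fields of the Google Geolocation API. Network calls are left out on purpose so the sketch runs offline.

```python
import re
from datetime import datetime

# Matches the "Address: AA:BB:..." part that `iwlist <iface> scan` prints per cell.
MAC_RE = re.compile(r"Address:\s*((?:[0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2})")

MONTHS = ("January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December")

def parse_mac_addresses(scan_output: str) -> list[str]:
    """Extract access-point MAC addresses from Wi-Fi scan text."""
    return [mac.upper() for mac in MAC_RE.findall(scan_output)]

def build_geolocation_payload(macs: list[str]) -> dict:
    """Build the JSON body for a Wi-Fi based geolocation request."""
    return {
        "considerIp": False,  # rely on the Wi-Fi scan, not the IP address
        "wifiAccessPoints": [{"macAddress": mac} for mac in macs],
    }

def time_of_day_word(hour: int) -> str:
    """Map an hour (0-23) to a word; the boundaries are assumptions."""
    if 5 <= hour < 8:
        return "dawn"
    if 8 <= hour < 12:
        return "morning"
    if 12 <= hour < 14:
        return "noon"
    if 14 <= hour < 18:
        return "afternoon"
    if 18 <= hour < 21:
        return "dusk"
    return "night"

def build_prompt(address: str, weather: str, nearby: dict[str, int],
                 now: datetime) -> str:
    """Compose the readable sentence that is handed to the image model."""
    places = ", ".join(f"{count} {kind}" for kind, count in nearby.items())
    return (f"A photo taken at {address} at {time_of_day_word(now.hour)} "
            f"in {MONTHS[now.month - 1]}. The weather is {weather}. "
            f"Nearby there are {places}.")

# Made-up example values, not data from the project.
example_prompt = build_prompt("Example Street 1, Vienna", "light rain",
                              {"parks": 2, "restaurants": 5},
                              datetime(2023, 5, 14, 12, 30))
```

In a full script, the payload would be sent as an HTTP POST to the Geolocation endpoint together with an API key, and the returned latitude and longitude would feed the address, weather, and places lookups before the prompt is built.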

Stable Diffusion is a powerful generative AI tool that lets us create images from text prompts. Once we receive the generated image, the Raspberry Pi displays it on its screen through a simple tkinter interface.
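
A tkinter display of this kind might look like the sketch below. It is an illustration under assumptions, not the project's interface: the 480x320 resolution is a guess for a 3.5-inch screen, the names `subsample_factor` and `show_image` are hypothetical, and the image is assumed to be saved as a PNG file that tkinter's `PhotoImage` can load.

```python
import math

# Assumed resolution of the 3.5-inch screen; adjust to the actual hardware.
SCREEN_W, SCREEN_H = 480, 320

def subsample_factor(img_w: int, img_h: int,
                     screen_w: int = SCREEN_W, screen_h: int = SCREEN_H) -> int:
    """Smallest integer shrink factor that makes the image fit the screen."""
    return max(1, math.ceil(max(img_w / screen_w, img_h / screen_h)))

def show_image(path: str) -> None:
    """Show an image fullscreen on a black background until Escape is pressed."""
    import tkinter as tk  # imported here so the helper above works headless

    root = tk.Tk()
    root.attributes("-fullscreen", True)
    root.configure(background="black")

    photo = tk.PhotoImage(file=path)
    factor = subsample_factor(photo.width(), photo.height())
    if factor > 1:
        photo = photo.subsample(factor)  # integer downscale only

    label = tk.Label(root, image=photo, background="black", borderwidth=0)
    label.image = photo  # keep a reference so the image is not garbage-collected
    label.pack(expand=True)

    root.bind("<Escape>", lambda event: root.destroy())
    root.mainloop()
```

Calling `show_image("generated.png")` (a hypothetical file name) would fill the screen with the image. A black background seems a sensible choice here because dark pixels emit little light and therefore add little to the reflection in the glass.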

Credits

The project was created as part of Design Investigations at the University of Applied Arts Vienna (Universität für angewandte Kunst Wien). It was developed with Leo Hafele, Bjørn Ravlo-Leira, and Johannes Mayer, with coding and ideation support from Grayson Earle. The project was strongly inspired by Bjørn Karmann's AI camera Paragraphica.